Introduction

Xous is a microkernel operating system with processes, threads, and messages. It is designed to have an extremely small kernel that delegates as much as possible to userspace. This book describes the operating system kernel as well as the services that support normal operating system behavior.

As this book is a work in progress, some chapters are placeholders and will appear blank.

The book is written for two audiences: kernel maintainers, and application developers.

Chapters 2 (Server Architecture), 3 (Introducing the Kernel), and 5 (System Startup) are primarily for kernel maintainers and system programmers.

Chapters 1 (Getting Started), 4 (Renode Emulation), 6 (Build System Overview), 7 (Messages) and 8 (Graphics) are more appropriate for application developers.

Chapter 9 (PDDB) covers the Plausibly Deniable DataBase, and has sub-sections for both kernel and application developers.

Getting Help on Devices

This book focuses on the Xous kernel.

- Precursor users please visit our wiki. You can also join our Matrix community and chat with our community members for help.

- Baochip users please join our discord and also see Getting started with Baochip Targets. There is also a

vscodeextension namedbaochip.

Architecture

Xous is a collection of small, single purpose Servers which respond to Messages. The Xous Kernel delivers Messages to Servers, allocates processing time to Servers, and transfers memory ownership from one Server to another. Every Xous Server contains a central loop that receives a Message, matches the Message Opcode, and runs the corresponding rust code. When the operation is completed, the Server waits to receive the next Message at the top of the loop, and processing capacity is released to other Servers. Every service available in Xous is implemented as a Server. Every user application in Xous is implemented as a Server.

Architecturally, Xous is most similar to QNX, another microkernel message-passing OS.

Servers

There are only a few "well known" Servers which are always available to receive Messages, and run the requested Opcode:

- The

xous-name-servermaintains a list of all registered Servers by name, and guards a randomized 128-bit Server ID for each of the Servers. The xous-name-server arbitrates the flow of Messages between Servers. - The

ticktimer-serverprovides time and time-out related services. - The

xous-log-serverprovides logging services. - The

timeserverpublicprovides real-time (wall-clock time) services. It is only accessed viastd::timebindings.

The remaining servers are not "well known" - meaning that the xous-name-server must be consulted to obtain a Connection ID in order to send the Server a Message. Such Servers include aes com dns gam jtag keyboard llio modals net pddb trng.

Messages, aka IPC

Every Message contains a Connection ID and an Opcode. The Connection ID is a "delivery address" for the recipient Server, and the Opcode specifies a particular operation provided by the recipient Server. There are two flavours of messages in Xous:

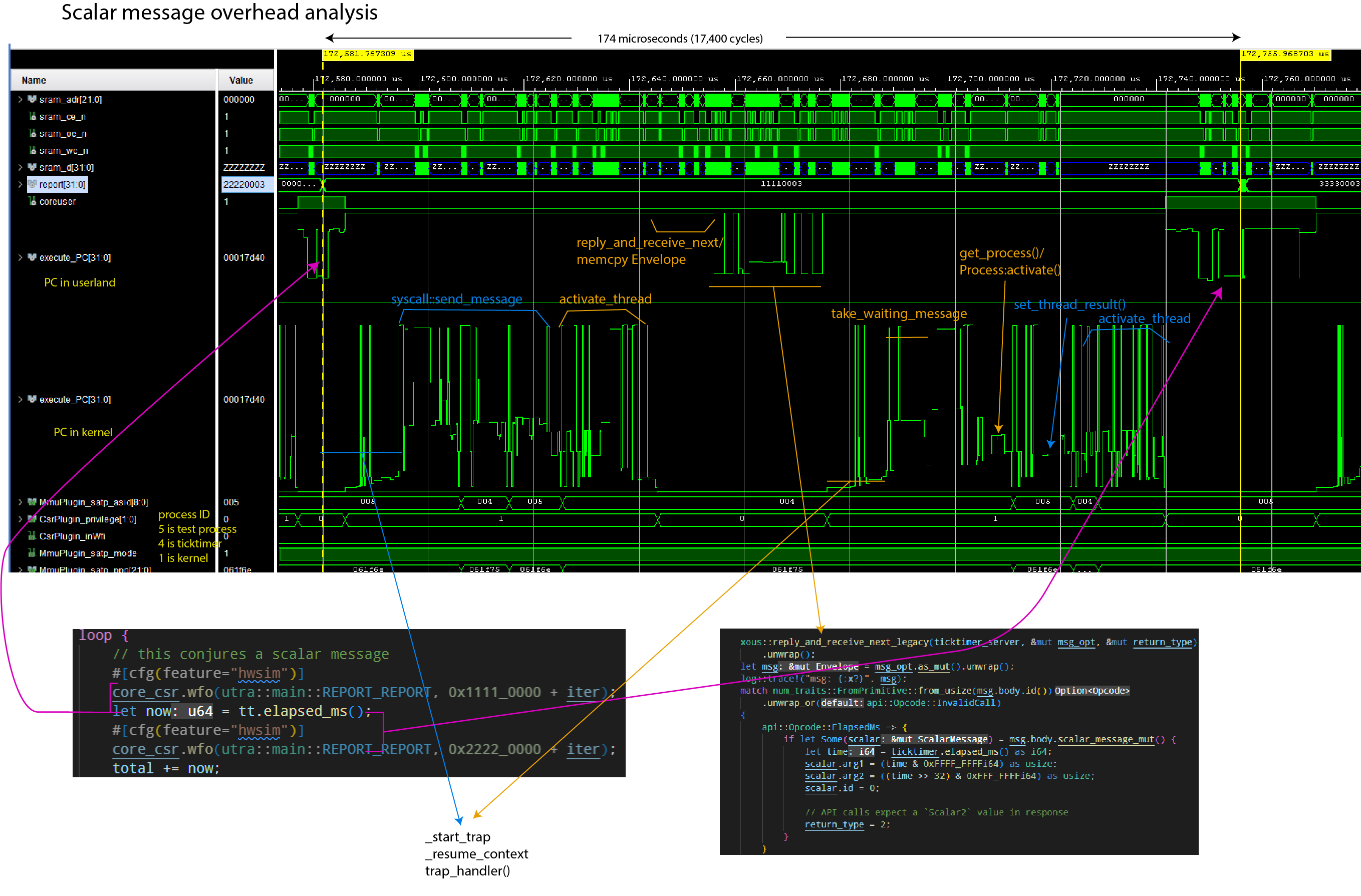

- Scalar messages are very simple and very fast. Scalar messages can transmit only 4 u32 sized arguments.

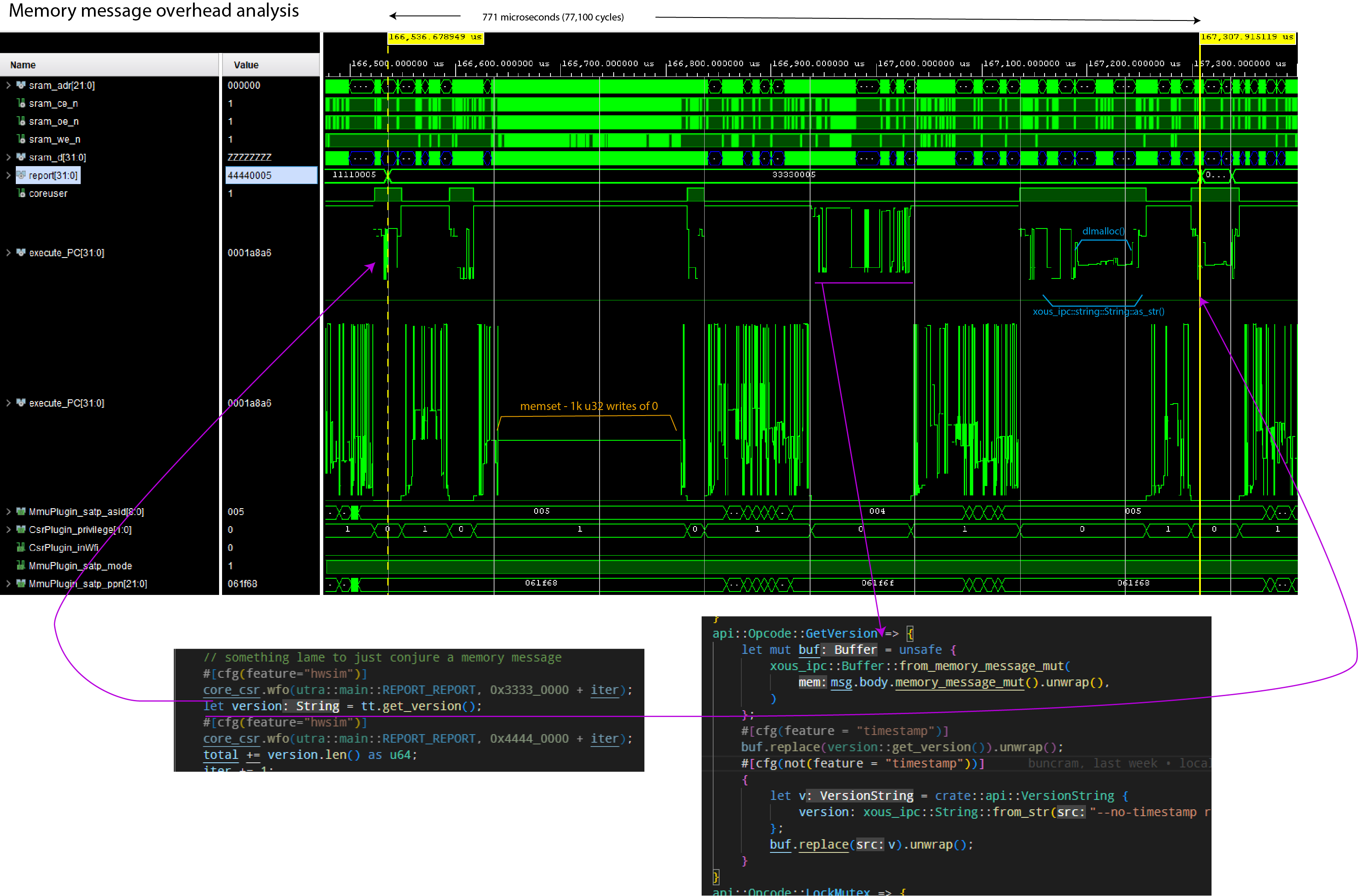

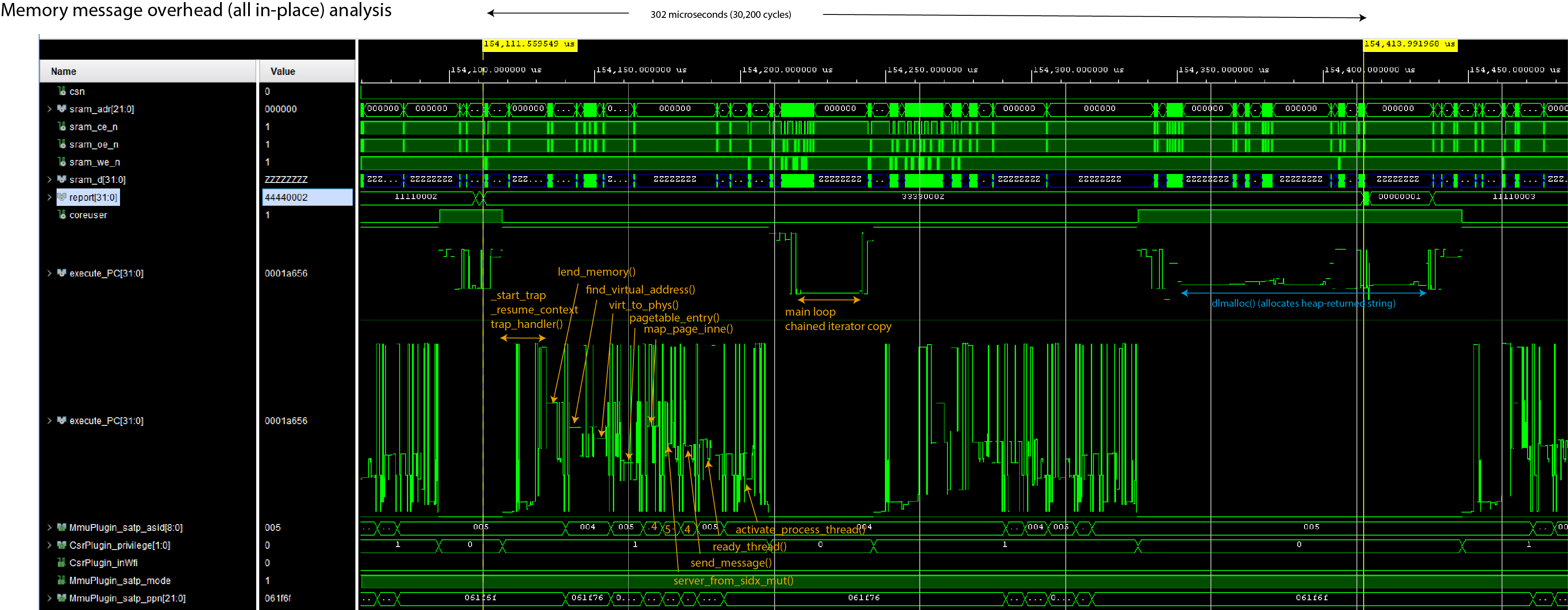

- Memory messages can contain larger structures, but they are slower. They "transmit" page-sized (4096-byte) memory chunks.

Rust structs need to be serialized into bytes before they can be passed using Memory Messages. Xous provides convenience bindings for rkyv, so any struct fully-annotated with #[derive(rkyv::Archive, rkyv::Serialize, rkyv::Deserialize)] can be serialized into a buffer by the sender and deserialized by the recipient.

The most simple Server communication involves a non-synchronizing "fire and forget" style of Messaging. The Sender sends a Message and continues processing immediately. The Recipient will receive the Message when it arrives, and process the Opcode accordingly. End of story. The ownership of the Message memory passes from the Sender to the Recipient and is Dropped by the Recipient. While there will be a delay before the Message is received - the sequence is assured. In the code, these are referred to as either Scalar Scalar Messages or Send Memory Messages.

Alternatively, A Server can send a synchronous Message, and wait (block) until the Recipient completes the operation and responds. In this arrangement, the Message memory is merely lent to the Recipient (read-only or read-write) and returned to the Sender on completion. While the sender Server "blocks", its processing quanta is not wasted, but also "lent" to the Recipient Server to complete the request promptly. In the code, these are referred to as either BlockingScalar Scalar Messages, or Borrow or MutableBorrow Memory Messages. Borrow messages are read-only, MutableBorrow are read-write, with semantics enforced by the Rust borrow checker.

asynchronous Message flow is also possible. The Sender will send a non-synchronous Message, which the kernel will amend with a "return token". The Recipient Server will complete the operation, and then send a non-synchronous Message in reply to this return token.

A Server may also send a synchronous Message and wait for a deferred-response. This setup is needed when the recipient Server cannot formulate a reply within a single pass of the event loop. Rather, the recipient Server must "park" the request and continue to process subsequent Messages until the original request can be satisfied. The request is "parked" by either saving the msg.sender field (for Scalar messages) or keeping a reference to the MessageEnvelope (for Memory messages). Memory Messages automatically return-on-Drop, relying on the Rust borrow checker and reference counting system to enforce implicit return semantics.

Acknowledgement

This project is funded through the NGI0 PET Fund, a fund established by NLnet with financial support from the European Commission's Next Generation Internet programme, under the aegis of DG Communications Networks, Content and Technology under grant agreement No 825310.

|

Getting Started

The wiki is going to be the most up-to-date source of information for getting started, as it is still a topic in flux.

Below are some excerpts from the Wiki, but some links may be out of date.

Update Your Device

- Updating your device.

- Videos: Install a debug cable; Assemble a Limited Edition

- Bleeding-edge binaries can be found at the CI server. Use at your own risk.

- Releases: please check the corresponding README within each subdir for notes.

Setting up Security

- Inspecting your mainboard

- Initialize Root Keys on "factory new" devices, by selecting the item from the main menu.

- (Optional) Burn Battery-Backed RAM keys Note: do not use this if you plan to store long-term secrets on the device.

Jargon

- Jargon: Confused by terms like SoC and EC? You're not alone.

Other Issues

Pre-Boot & Security

- What happens before boot? fills in the details of everything that happens before the first instruction gets run.

- "Secure Boot" and key ROM layout

- eFuse/BBRAM FPGA key FAQ

Between the Software and Hardware: Hardware Abstractions

- UTRA Hardware register access abstraction for Xous

- Peripheral access conventions Goals for hardware register abstractions

- COM Protocol between the embedded controller (EC) and the main SoC

Hardware Documentation

- SoC register set / generated from SoC Litex Design Source

- SoC block diagram is embedded in the README for the SoC

- EC register set / generated from EC Litex Design Source

- Hardware design files PDFs of schematics are in the "mainboard-*" directories

TRNG Chronicles

- Physics and electrical design of the external Avalanche generator

- Notes on Characterization and debugging of raw sources

- On-line health monitoring

- Post-Generation Conditioning with ChaCha

Audit Trail

- crate-scraper is the beginning of a tool that helps with audit trails. It saves all the source code derived from

crates.ioto build Xous, and collates all thebuild.rsfiles into a single mega-file for faster manual inspection.

Meta-Issues

- imports How imported repositories that are not yet stand-alone crates are managed

- Emulation

- Tools Helper tools to build bootable images

- Converting Wiki Pages from ODT to Markdown

Hello, World!

The quickest "hello world" is to run Xous under emulation on your PC using hosted mode.

- Get the latest rust or run

rustup updateif you already have Rust installed. - Clone xous-core.

- Inside the

xous-coredirectory, runcargo xtask run.

Notes:

- If you have updated

rustor have tinkered withstdon your system, you can re-install thexoustarget withcargo xtask install-toolkit --force, and then runrm -r targetto force remove stale build files. - You may also need to install some additional libraries, such as

libxkbdcommon-dev. - :warning: hosted mode is literally Xous running on your local host, which means it supports more features than Xous on native hardware:

- We do not have

tokiosupport planned anytime soon. - We do not have

Filesupport in Xous; instead, we have thepddb. Netsupport in actively in development and we hope to have fairly robust support forlibstdNetbut, note thatsocket2crate (which is not part of Rustlibstd) does not recognize Xous as a supported host.

- We do not have

Running on Hardware

Baochip Users

See Getting Started with Baochip Targets.

Note that there is a vscode extension for the Baochip family of targets. Look for baochip in the extension store.

Precursor Users

- Please refer to the manifest.json documentation for integration notes

replapp demo is the starting point for users who want to interact with their device by typing commands. This demo leverages more of the Xous UX built-in frameworks.ballapp demo is the starting point for users who prefer to run close to the bare iron, getting only key events and a framebuffer for crafting games and bespoke apps.

Coding Style

Lint

Any code in main should be free of rustc lint.

Formatting

Generally, most stable APIs and crates should be formatted with the Rust formatter. However, there are some idioms in Xous code that format quite poorly with the default Rust formatter options.

We are waiting on the stabilization of more rustfmt features to define a custom

rustfmt.toml to address this before making formatting mandatory. It's been a few

years waiting for this, though, so we might just bite the bullet and run rustfmt

with +nightly and the features we need in rustfmt.toml, so that

we can have a uniform style.

Trailing whitespaces are frowned upon.

Exception Handling

Background

A mistake was made early on in defining the Xous API where all errors were propagated, even for operations that are supposed to be infalliable or there is no sensible way to handle an error if it were to arise.

The subtlety is that some errors in Rust are more like assert statements.

For example, a function that unpacks a message into a struct could fail. If such

a failure is encountered, then you'd like to see a panic showing that exact line

of code so you can fix the bug. There isn't a sensible alternative code path at run-time,

because the root cause was likely a type mismatch error.

In most of the original code base, that error would be propagated up the call

stack as an InternalError, until you get back to your main loop, at which point

the main loop just throws up its hands and reports a panic but at a line of code

several call frames away from the offending statement. Normally this problem

can be fixed by reading the stack trace from a panic-unwind, but Xous does not have

mature panic-unwind support and also the device's screen real estate is limited

so a deep call stack cannot be displayed entirely on the screen.

Recommendation

We are in the process of refactoring code from going to a "propagate all errors" rule to a "panic on infalliable failures" rule. Infallible operations include most syscalls (except ones that are explicitly fallible) and helper methods meant to transform inter-process messages and buffers into types (note that this does not include methods that receive, for example, arbitrary messages over network).

A simple .unwrap() is probably sufficient to check the results of most infalliable operations,

because the panic handler will print a panic on that line.

unwrap() may even be preferable to a .expect("helpful error message") in most

cases, because "helpful error message" takes memory to store, increases the

binary size, and in most cases the line of code where the panic happened is the most

informative part of the error message.

Any error that happen in fallible operations (timeouts, OOMs, disconnects, etc.) should be handled and/or passed up the stack.

Xous by Example

This chapter is for developers who like to see an example of how things work.

The code here is a snapshot of the baosec target development branch, including the vault2 application. It was built with the following command line:

cargo xtask baosec --no-timestamp --feature usb --feature with-pddb --feature aestests --kernel-feature debug-proc

--no-timestampsuppresses timestamp generation in the kernel, allowing for reproducible builds--feature usbspecifies that we want the USB stack features to be turned on in all services--feature with-pddbspecifies that we're using the PDDB in all our programs. Eventually this flag will be deprecated in this target as it will be assumed by default--feature aestestswas added because at the moment the documentation was written, some AES testing was also being done--kernel-feature debug-procis what allows me to generate the tables you see below. Kernel and loader features are separate from userspace application features, and thus those features have to be specified with--kernel-featureor--loader-feature, respectively.

The above command generated three binary artifacts, loader.uf2, swap.uf2, and xous.uf2. These were all copied onto the baosec board using the USB loader interface.

Processes

Here's what the process table looks like in this target image:

Process 1 state: Ready(100) TID: 2 Memory mapping: (satp: 0x80461179, mode: 1, ASID: 1, PPN: 61179000) conns:0/32 kernel

Process 2 state: Running(0) TID: 2 Memory mapping: (satp: 0x808611bf, mode: 1, ASID: 2, PPN: 611bf000) conns:3/32 xous-swapper

Process 3 state: Sleeping TID: 2 Memory mapping: (satp: 0x80c611b8, mode: 1, ASID: 3, PPN: 611b8000) conns:5/32 keystore

Process 4 state: Sleeping TID: 2 Memory mapping: (satp: 0x810611b1, mode: 1, ASID: 4, PPN: 611b1000) conns:2/32 xous-ticktimer

Process 5 state: Ready(1000) TID: 3 Memory mapping: (satp: 0x814611aa, mode: 1, ASID: 5, PPN: 611aa000) conns:2/32 xous-log

Process 6 state: Ready(100) TID: 2 Memory mapping: (satp: 0x818611a3, mode: 1, ASID: 6, PPN: 611a3000) conns:2/32 xous-names

Process 7 state: Sleeping TID: 2 Memory mapping: (satp: 0x81c6119c, mode: 1, ASID: 7, PPN: 6119c000) conns:7/32 usb-bao1x

Process 8 state: Sleeping TID: 3 Memory mapping: (satp: 0x82061195, mode: 1, ASID: 8, PPN: 61195000) conns:12/32 bao1x-hal-service

Process 9 state: Sleeping TID: 2 Memory mapping: (satp: 0x8246118e, mode: 1, ASID: 9, PPN: 6118e000) conns:5/32 modals

Process 10 state: Sleeping TID: 5 Memory mapping: (satp: 0x82861187, mode: 1, ASID: 10, PPN: 61187000) conns:8/32 pddb

Process 11 state: Sleeping TID: 3 Memory mapping: (satp: 0x82c61180, mode: 1, ASID: 11, PPN: 61180000) conns:9/32 bao-video

Process 12 state: Sleeping TID: 3 Memory mapping: (satp: 0x83061172, mode: 1, ASID: 12, PPN: 61172000) conns:10/32 bao-console

Process 13 state: Sleeping TID: 2 Memory mapping: (satp: 0x8346116a, mode: 1, ASID: 13, PPN: 6116a000) conns:18/32 vault2

Note how each process has an ID number (1-13) in this case. This corresponds to an ASID in Sv32. The kernel is PID 1.

Each process has a state (Ready, Running, Sleeping), along with the currently scheduled TID or thread-ID.

Furthermore, each process has up to 32 connections that it can make to other servers.

Note that PID 1-11 are located in xous.uf2, whereas PID 12 and 13 are located in swap.uf2. There's no way to distinguish this from the kernel's perspective once the system is up and running, but it's important to note because copying just one of the two images can lead to ABI incompatibilities between the applications in swap.uf2 and the core services in xous.uf2.

Servers

Here's the list of servers:

idx | pid | process | sid

--- + --- + -------------------- | ------------------

0 | 5 | xous-log | SID([73756f78, 676f6c2d, 7265732d, 20726576])

1 | 6 | xous-names | SID([73756f78, 6d616e2d, 65732d65, 72657672])

2 | 7 | usb-bao1x | SID([4c403d60, 972d79bb, febdf19d, a4d054cf])

3 | 10 | pddb | SID([8e004f62, 521e147d, bcb60ce, a6564b06])

4 | 8 | bao1x-hal-service | SID([1c711b06, 56546446, c7dd75de, 2952465e])

5 | 9 | modals | SID([2597c356, c6c3a4df, 1bde0f1, 42437b9a])

6 | 11 | bao-video | SID([724e4018, 83d15c9e, a1954161, 499574f6])

7 | 8 | bao1x-hal-service | SID([6279656b, 6472616f, 756f625f, 7265636e])

8 | 2 | xous-swapper | SID([982b7882, eb5d20a2, 53f79ccb, 44d37d10])

9 | 3 | keystore | SID([1df38619, 790f559b, e1d4c54d, bec5c5e9])

10 | 4 | xous-ticktimer | SID([6b636974, 656d6974, 65732d72, 72657672])

11 | 12 | bao-console | SID([fa38d1ef, 9fbe4c20, 4d1223ea, 216750de])

12 | 13 | vault2 | SID([db457098, edc561f1, f7c07970, 9d3a5509])

13 | 8 | bao1x-hal-service | SID([b75041bd, 1c527620, 2b7b709d, 6fd0c190])

14 | 8 | bao1x-hal-service | SID([656d6974, 76726573, 75707265, 63696c62])

15 | 8 | bao1x-hal-service | SID([f97d0308, 5804dfc7, f2a324a5, c61af8a1])

16 | 12 | bao-console | SID([1b58af85, 703ceb4, 459ff738, 29425226])

17 | 7 | usb-bao1x | SID([5b658ac3, e2b2f065, dfc85a, 49b626cd])

18 | 10 | pddb | SID([9fe99d87, 686aaa29, dd63834a, f31ab336])

19 | 11 | bao-video | SID([2b65be06, c6774932, f19a8610, 92cba265])

20 | 13 | vault2 | SID([25b43d0f, 7c9e09d5, a11ada33, 675a43a2])

21 | 13 | vault2 | SID([cb57f1d5, 424ebe49, fd835279, cea1190b])

22 | 13 | vault2 | SID([bdb3ed67, 9cad154, 7940ec72, 2eaae5a4])

23 | 13 | vault2 | SID([519f2270, fd0bafb9, b270c3e3, a07d138d])

24 | 13 | vault2 | SID([b7de855c, 8ed779f7, 29ff9d7, e6514744])

25 | 13 | vault2 | SID([7c38c99e, bfc80fd4, 1e9bf375, a377920])

26 | 13 | vault2 | SID([3cebb08d, 17979315, 1ebd1cf8, f3569a0])

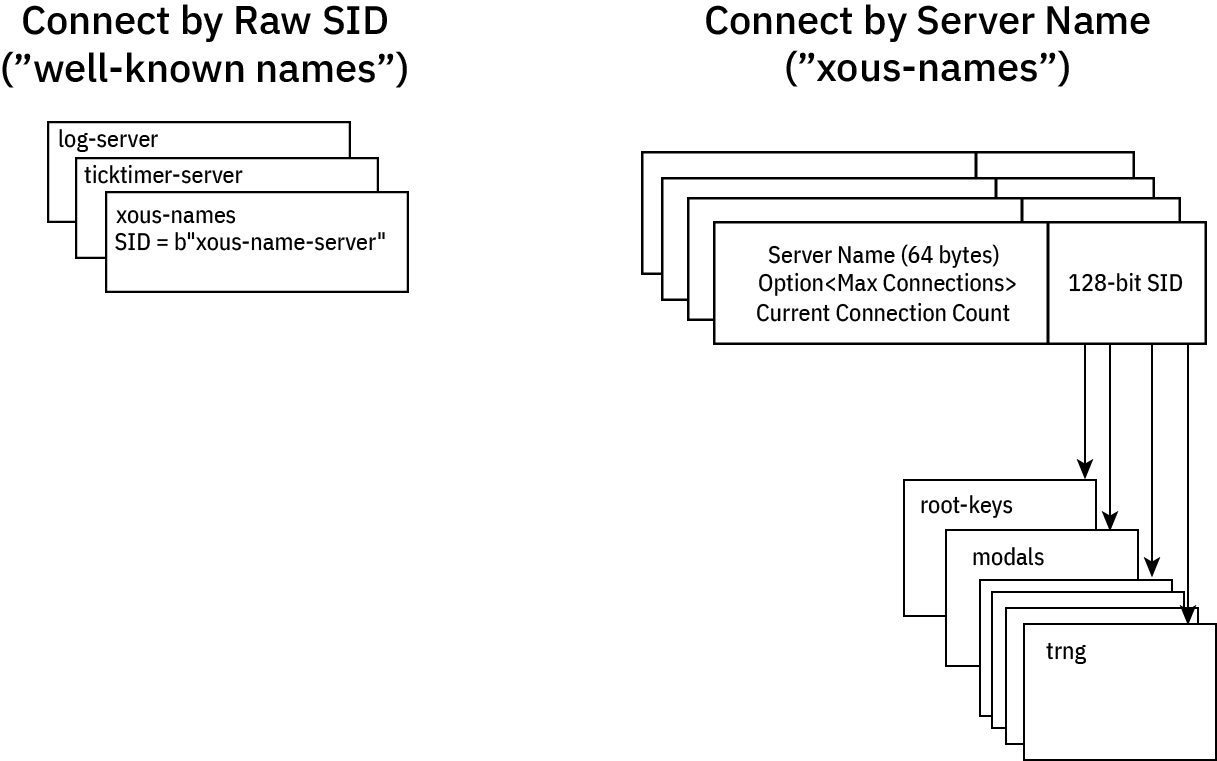

Here you can see the list of servers allocated in the system. The SID is a 128-bit number. It can be either a

cryptographically random number provided by the kernel, or a well-known number specified by the server itself.

The choice is up to the server: some services want to be connectable by everyone, and so they use a fixed

SID; others are meant to be secret and thus use the random SID.

For example, the hex numbers for the xous-log SID correspond to the ASCII string b"xous-log-server ", whereas

the SID for some of the vault2 servers are just random numbers.

xous-names is a lookup service that can go from a descriptive, free-form utf-8 string to a SID, should a server have

registered its SID with xous-names (not all SIDs need to be registered with xous-names, and in fact servers

are free to exchange SIDs directly for the highest level of undiscoverability). xous-names can implement a TOFU-style

access control, allowing a balance of discoverability and security.

Interrupt Handlers

Interrupt handlers are dynamically registered by various services in Xous. Here's the list of handlers in this target:

Interrupt handlers:

IRQ | Process | Handler | Argument

1: usb-bao1x @ 2ff20 Some(20001980)

2: bao1x-hal-service @ 2a754 Some(20000758)

5: xous-log @ 19212 Some(20000008)

8: bao-video @ ad0dc Some(601f2d80)

10: bao1x-hal-service @ 23d4c Some(7ffffc2c)

11: keystore @ 25ace Some(20000008)

20: xous-ticktimer @ 1f7b4 Some(7ffffc30)

30: bao1x-hal-service @ 23cd0 Some(60009000)

The IRQ number corresponds to a hardware IRQ number (this is specific to the VexRiscv implementation). The process is the name of the process that has registered a handler for the IRQ; the hex number is the offset in process .text region where the handler code is located, and the Argument is the one 32-bit number that's passed to the interrupt handler that gives it some context to handle the interrupt.

RAM Usage

This gives an idea of the RAM usage of various processes:

RAM usage:

PID 1: 160 k kernel

PID 2: 364 k xous-swapper

PID 3: 60 k keystore

PID 4: 48 k xous-ticktimer

PID 5: 64 k xous-log

PID 6: 52 k xous-names

PID 7: 68 k usb-bao1x

PID 8: 84 k bao1x-hal-service

PID 9: 48 k modals

PID 10: 260 k pddb

PID 11: 64 k bao-video

PID 12: 244 k bao-console

PID 13: 484 k vault2

2000 k total

This system has swap memory. Here you can see the swapper itself actually uses a good chunk of the available 2048kiB of memory in the system; all its pages have to stay resident so that it can manage memory faults without re-entrant swaps. PID's 1-11 in this system are XIP: they are written directly to RRAM, and thus the only RAM consumption is for the stack + heap. Also, processes that are not currently running have most of their data swapped out (such as usb-bao1x in this case).

bao-console and vault2 are swap-located programs. These occupy a larger memory footprint in part because they are actively running and doing things, thus their working set is swapped in; but they also have a larger memory footprint in that any code that runs has to be RAM-resident because they are swap programs.

Glossary & Jargon

bao1x - The Baochip-1x SoC.

baosec - Internal development name of a fully-featured hardware password manager based on the bao1x SoC.

betrusted-soc - The name of the FPGA-based SoC that powers Precursor

BDMA - BIO DMA (Direct Memory Access). DMA mediated by a BIO CPU.

BIO - Bao I/O. A quad-core Risc-V, register-mapped I/O processor with register-mapped inter-processor communication FIFOs.

blocking - A message type that stalls program execution until it returns.

boot0 - On bao1x, a small, indelible code stub that sets up initial security and checks the validity of boot1.

boot1 - On bao1x, an updateable machine-mode program that mediates firmware updates, audits and security.

CID - A connection ID. In order to keep SIDs secret, the CID is effectively a lookup table to SIDs that is maintained by the kernel.

dabao - A breakout board for the bao1x targeted at developers and system integrators.

Drop - A trait in Rust that is called when an object goes out of scope. Frequently used in Xous to automatically respond to blocking message types.

client - Any program attempting to talk to a server. Servers can be clients of other servers. Even the kernel can be a client of another server.

connection - A routable path between a client and a server. There can be up to 32 connections per PID.

HAL - Hardware abstraction layer.

hosted mode - An emulation mode for Xous where the kernel runs on your local PC

keystore - A userspace service that manages security keys for the bao1x platform. Must be at PID 3 when present. In Sv32 mode, the underlying hardware is aware of the current PID and will deny access to data slots if the wrong PID is running. Note that bootloaders have free reign of the system.

lend - In the context of memory messages, a page of memory that has been mapped into a server's address space and marked as read-only. The page is returned to the caller when the message is Dropped by the server.

loader - A program that runs in machine mode that copies the initial process set and sets up virtual memory for the kernel.

memory message - A message that uses virtual memory pages to communicate. A full page of memory is mapped into the target server's process space. The page can be send, lend, or mutable lend type.

message - An abstract type that is used to communicate between clients and servers. Messages can be scalar or memory, blocking and nonblocking.

mutable lend - In the context of memory messages, a page of memory that has been mapped into a server's address space and marked as read/write. The page (and any updated data within) is returned to the caller when the message is Dropped by the server.

nonblocking - A message type that continues execution without waiting for the receiver to acknowledge receipt. Basically, async communications. Scalar messages are assumed to be non-blocking unless specified as blocking.

page - The smallest mappable unit of memory in Sv32. In Xous, this is set to 4096 bytes.

Precursor - A fully-featured system for password management built around the betrusted-soc FPGA design. See precursor.dev.

scalar message - A message whose arguments fit entirely within the available registers of the CPU (maximum of 5, 32-bit arguments). Scalar messages are distinguished from memory messages in that they are much faster to send and return.

send - In the context of memory messages, a page of memory that has been mapped into server's address space with no expectation of return. The page now belongs to the target server forever, until it Drops the page.

thread - A scheduled unit of computation. Each PID can have up to 30 threads. Note that thread 0 is always present and it is the interrupt handler thread.

TID - The ID number of a thread. TID 0 is the interrupt handler thread.

PID - The process ID. This defines an isolated virtual memory name space. There can be up to 255 PIDs in Xous, where PID 1 is always the kernel (PID 0 is not valid). As a rule, each service is located in its own PID, but for tightly coupled services more than one can be packed into a PID.

server - A routable messaging endpoint, specified with a 128-bit server ID. The server ID may be randomly generated, in which case, it is considered secret and not directly discoverable; it has to be looked up and approved via xous-names.

service - A collection of servers that provide a service. Generally confined to a single PID. As a microkernel, many functions traditionally provided by a monolithic kernel are actually provided by userspace services. Examples: ticktimer, xous-names.

Sv32 - The RISC-V standard for virtual memory using 32-bit addresess and 2-level page tables.

target (Rust) - A compiler specification in the form of <architecture>-<vendor>-<os>-<abi>. Example: riscv32imac-unknown-xous-elf

target (Xous) - A specific combination of board & application software, yielding a binary image that can be programmed onto the board. Examples: baosec, dabao, app-image.

ticktimer - The equivalent of a scheduler in monolithic kernels. ticktimer implements sleeps, mutex's, and elapsed time sense for the overall system.

TOFU - Trust On First Use. A method for limiting access where the first contact is considered to be the only trusted contact: requires a strong assumption that the boot environment is safe.

xous-log - A service that provides console logging and interaction.

xous-names - A lookup service that can translate free-form utf-8 strings that name a service into CIDs. Internally, xous-names maintains a lookup table between the server name and its SID, along with a count of connections to the server. It can enforce a maximum connection limit, enabling a TOFU-style access control between trusted and untrusted services.

xous-swapper - A userspace service that manages swap memory on behalf of the kernel. Must always be at PID 2 when present.

Server Architecture

This chapter is written for kernel maintainers.

Application programmers should see caller idioms and messages for details on how to use servers.

What is the difference between a Thread and a Server?

A Server is a messaging construct, whereas threads refer to execution flow. You can have a thread without a Server, and you can have multiple Servers referenced in a thread.

Threads in Xous are conventional: just a PC + stack that runs in a given process space. A single process can have up to 32 threads, and each thread will run until their time slice is up; or they yield their time slice by either explicitly yielding, or blocking on something (such as a blocking message).

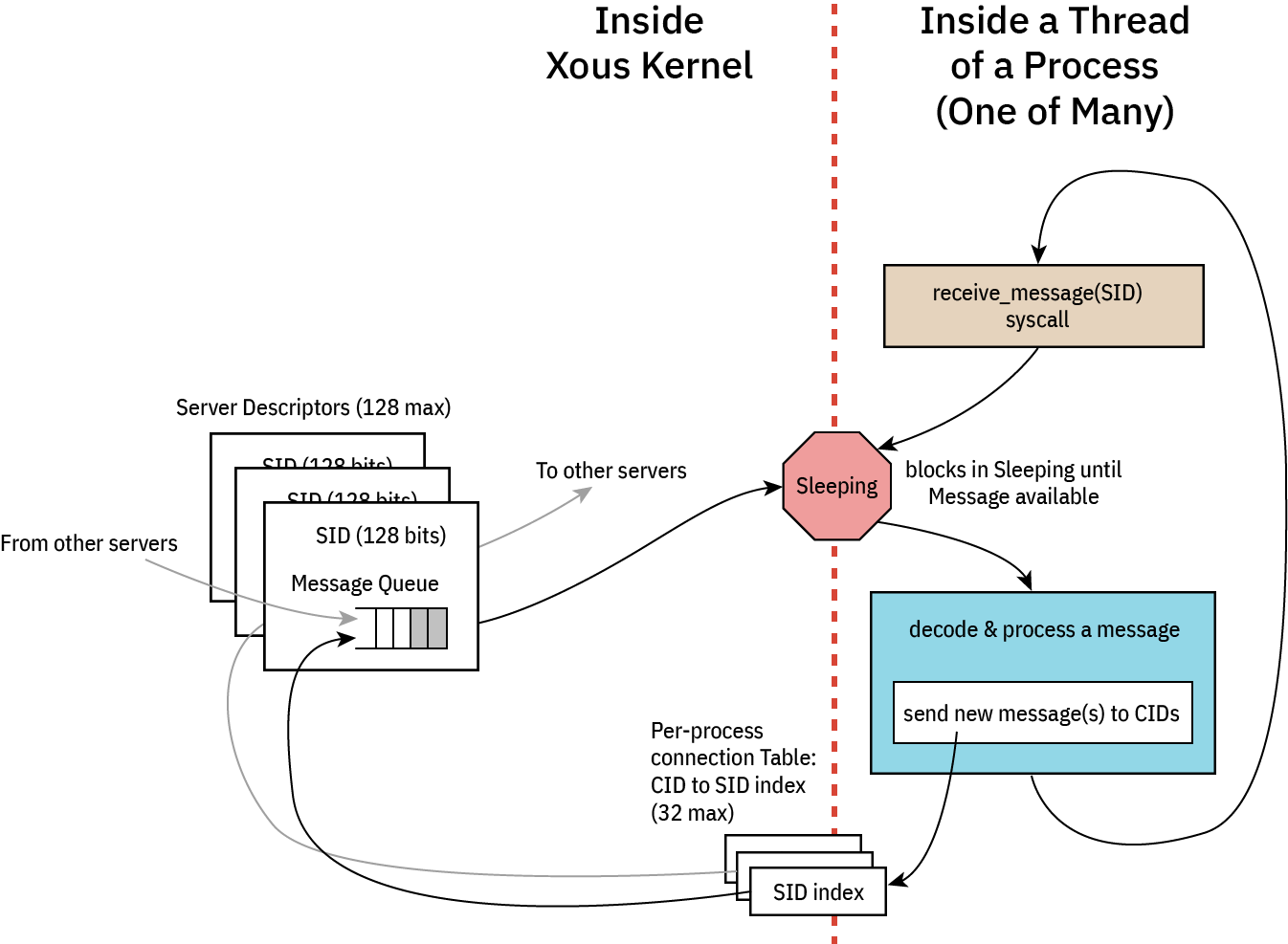

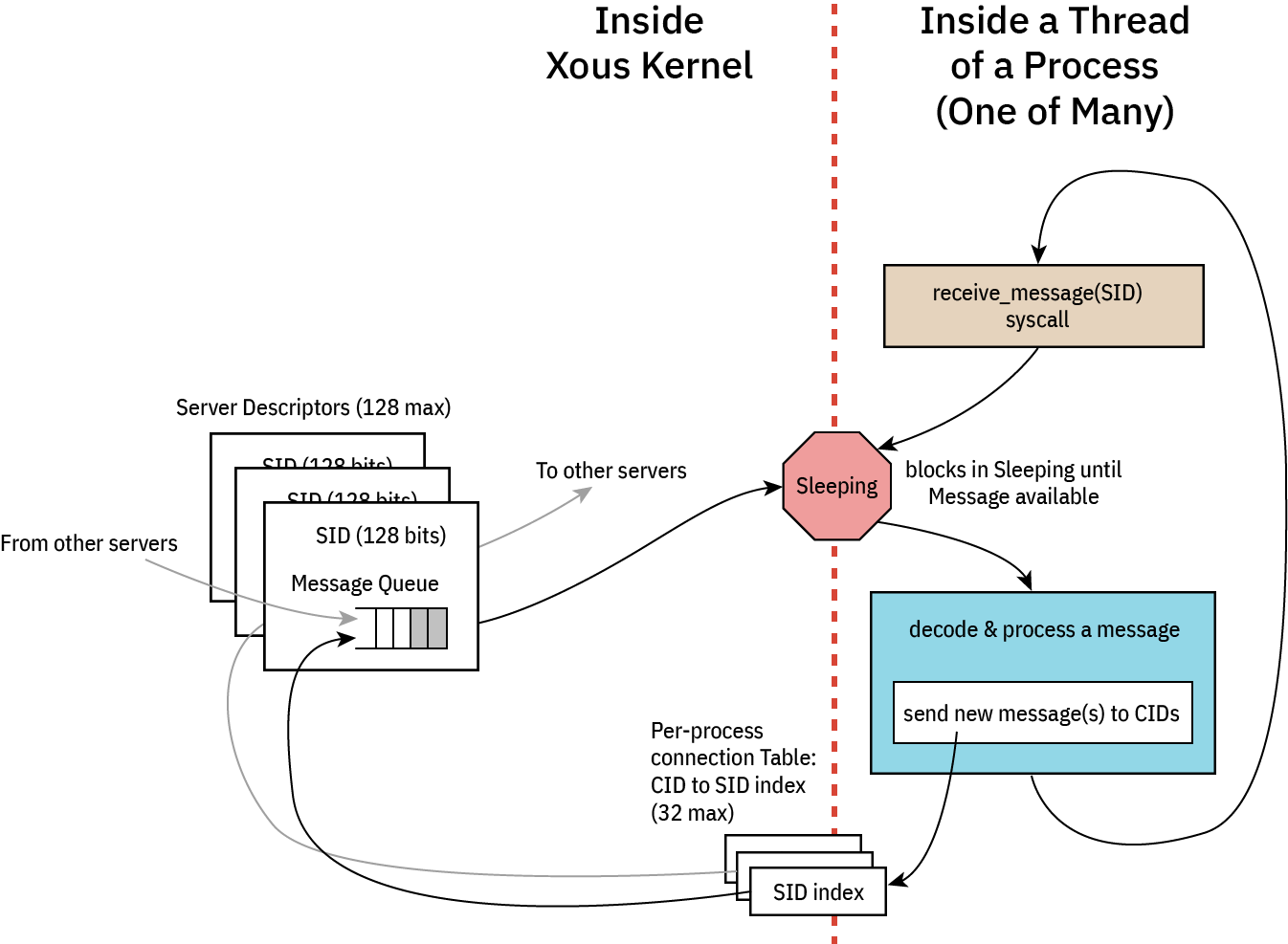

Blocking messages dovetails into the concept of servers: in Xous, a server is basically just a 128-bit ID number that servers as a mailbox for incoming messages. There is a limit of 128 servers in Xous across all processes and threads. Within that limit, one can allocate all the servers they want for a given thread, although it's not terribly useful to do that.

Messages specify a 128-bit server ID as a recipient; and, the typical idiom (although it doesn't have to be this way) is for a thread to wake up, initialize, allocate a server ID, and then wait for a message to arrive in its inbox.

If no message arrives, the thread consumes zero time.

Once a message arrives, the thread will be unblocked to handle the message when its quantum comes up. The thread may receive a quantum through the normal round-robin pre-emptive scheduler, but it could also receive a quantum in the case that a blocking message is sent from another thread. What happens then is the sender yields the remainder of its time to the receiving server, so that the message may be handled immediately.

As a counter-example, a "valid" but not recommended way to communicate between threads in Xous is to do something like:

let sem = Arc::new(AtomicBool::new(false));

thread::spawn(

let sem = sem.clone();

move || {

loop {

if sem.load(Ordering::SeqCst) {

// do something useful here

// do it just once

sem.store(false, Ordering::SeqCst);

} else {

// we could be nice and yield our quantum to another thread

xous::yield_slice();

// but even if you forget to yield, eventually, the pre-emptive

// scheduler will stop polling and allow another thread to run.

}

}

}

);

// Later in the parent thread, use this to trigger the child thread:

sem.store(true, Ordering::SeqCst);The "bad example" above starts a thread that just polls the sem variable until it is true before a one-shot execution of the thing it's supposed to do.

The problem with this construction is that it will constantly run the CPU and always take a quantum of time to poll sem. This is very inefficient; it actually burns more battery, and has a material impact on user experience to do it this way.

A more "Xous" way to do this would be:

let sid = xous::create_server().unwrap();

let conn = xous::connect(sid).unwrap();

thread::spawn(

// sid automatically clones here

move || {

loop {

let msg = xous::receive_message(sid).unwrap();

// typically one would decode an opcode from the message body, so you can dispatch more than one function.

let _opcode: Option<ActionOp> = FromPrimitive::from_usize(msg.body.id());

// but in this case, we have exactly one thing, so:

// do something useful here

// indicate that we got our thing done

xous::return_scalar(msg.sender, retval).unwrap();

}

}

);

// Later in the parent thread, use this to trigger the child thread:

xous::send_message(conn,

Message::new_blocking_scalar(0 /* this is the opcode field */,

0, 0, 0, 0) // up to 4 "scalar" arguments can be sent as well

).unwrap();The above send_message() would yield the remaining quantum of time for the parent thread, and dispatch into the child thread. The child would receive the message, do "something useful" and return a value to the caller. Assuming there was still time left in the quantum, this return_scalar would return execution back to the parent thread!

If the message was not blocking, the parent thread would continue executing until its quantum is completed, and the child thread would handle the message only then. Assuming the child thread can handle the response very quickly, it would yield the remainder of its quantum once it completed doing "something useful" and it returned to the top of its loop where it calls receive_message(), and found its input queue to be empty.

Thus, Xous is carefully coded such that everything blocks if it's not being used, using the idiom above. Crates like crossbeam are implemented using condvar which internally uses servers and messages to ensure that blocking waits are efficient.

So, in general, if the CPU load bar is pegged to 100% and nothing is "going on" (perhaps just a spin-wait), it's considered a bug.

Synchronization Primitives

Synchronization primitives are provided via the Ticktimer Server. This includes mutexes, process sleeping, and condvars.

Thread Sleeping

Thread sleeping is a primitive that is implemented by the ticktimer server.

This takes advantage of the fact that a sender will suspend a thread

until a BlockingScalar message is responded to.

In order to suspend a thread, simply send a BlockingScalar message

to the ticktimer server with an id of 1 and an arg1 indicating

the number of milliseconds to sleep.

If you need to sleep for more than 49 days, simply send multiple messages.

Mutex

Mutexes allow for multiple threads to safely access the same data. Xous Mutexes have two paths: A fast path, and a slow path. Non-contended Mutexes traverse the fast path and will not need a context switch. Contended Mutexes will automatically fall back to the slow path.

The core of a Mutex is a single AtomicUsize. This value is 0 when

the Mutex is unlocked, and nonzero when it is locked.

Mutex: Locking

Locking a Mutex involves a simple try_lock() operation. In this

operation, atomic instructions are used to replace the value 0

with the value 1, failing if this is the case.

pub unsafe fn try_lock(&self) -> bool {

self.locked.compare_exchange(0, 1, SeqCst, SeqCst).is_ok()

}If the Mutex is locked, then the current thread will call yield_slice()

which hands execution to another thread in the current process in the hope

that the other thread will release its mutex.

This currently occurs three times.

If the lock still cannot be locked, then it is "poisoned". Instead of

swapping 0 for 1, the thread does an atomic Add of 1 to the current

value. If the resulting value is 1 then the lock was successfully

obtained and execution may continue as normal.

However, if the value is not 1 then the process falls back to the Slow Path. This involves sending a BlockingScalar to the ticktimer

server with an id of 6 and arg1 set to the address of the Mutex.

Mutex: Unlocking

Unlocking a Mutex on the Fast Path simply involves subtracting 1 from

the Mutex. If the previous value was 1 then there were no other

threads waiting on the Mutex.

Otherwise, send a BlockingScalar to the ticktimer server with

an id of 7 and arg1 set to the address of the Mutex.

Condvar

Condvar is Rust's name for "conditional variables". Broadly speaking, they are instances where one thread takes an area of memory and says "Wake me up sometime in the future." A different thread can then say "Wake up one other thread that's waiting on this object." Or it can say "Wake up all other threads that are waiting on this object."

Condvar: Waiting for a Condition

To suspend a thread until a condition occurs, or until a timeout hits,

allocate an area of memory for the condvar. Then send a BlockingScalar

message to the ticktimer server with an id of 8. Set arg1 to the

address of the condvar.

In order to add a timeout, set arg2 to the number of milliseconds to

wait for. Times longer than 49 days are not supported, so multiple

calls will be required. If no timeout is required, pass 0 for arg2.

Condvar: Signaling Wakeups

To wake up another thread, send a BlockingScalar message to the ticktimer

server with an id of 9, and set arg1 to the address of the condvar.

arg2 should contain the number of blocked threads to wake up.

In order to wake only one thread, pass 1.

Introducing the Kernel

Xous is a microkernel design that tries to keep as little as possible inside the main kernel. Instead, programs can start "Servers" that processes can connect to in order to accomplish a task.

Processes are isolated, and therefore an MMU is strongly recommended. One process can have multiple threads, and processes cannot interact with one another except by passing Messages.

A Message is a piece of data that can be sent to a Server. Messages contain one usize ID field that may be used to identify an opcode, and may additionally contain some memory or some usize scalars.

Additionally, a Message may be either blocking, in which case it will wait for the Server to respond, or non-blocking, where they will return immediately.

"Drivers" are really just Servers. For example, to print a string to the console, send a StandardOutput (1) opcode to the server "xous-log-server " with a &[u8] attached to some memory. The process will block until the server is finished printing.

The entire Xous operating system is built from these small servers, making it easy to work on one component at a time.

Memory and mapping

Memory is obtained by issuing a MapMemory syscall. This call can optionally provide a physical address to map. If no memory is specified, a random physical page is provided. The process has no way of knowing the physical address of the page.

If the caller allocates memory from the primary region, it will be zeroed. If it allocates memory from an ancillary region such as a registor or a framebuffer, then that memory will not be initialized.

Processes can use this to allocate memory-mapped regions in order to create drivers.

Interrupts

Processes can allocate interrupts by calling the ClaimInterrupt call. If an interrupt has not been used, then that process will become the new owner of that interrupt. This syscall requires you to specify an address of a function to call, and you may optionally provide an argument to pass to the function handler.

There is no way to disable interrupts normally, except by handling an interrupt. That is, interrupts are disabled inside of your interrupt handler, and will be re-enabled after your interrupt handler returns.

There are a very limited set of functions that may be called during an interrupt handler. You may send nonblocking Messages and allocate memory, for example. However you may not Yield or send blocking Messages.

A common pattern for "disabling interrupts" is to come up with an interrupt that does nothing but trigger on-demand and handling the requisite code in that function. This is used by the suspend/resume server, for example, in order to ensure nothing else is running when the system is powering down.

Supported Platforms: RISC-V 32 and Hosted

Xous currently supports two platforms: RISC-V 32 and Hosted mode.

RISC-V 32 is the hardware that ships in Betrusted and Precursor, and is what is available in the Renode emulator.

An additional platform is Hosted mode, which targets your desktop machine. This can be used to debug builds using desktop-class debuggers such as rr or even just gdb. You can also use profilers in order to discover where code performance can be improved.

Hosted mode is discussed in more detail later

Memory Management in Xous

Memory is allocated with the MapMemory syscall. This call accepts four arguments:

- physical:

Option<NonZeroUsize>: The physical address you would like to allocate. SpecifyNoneif you don't need a particular address. - virtual:

Option<NonZeroUsize>: The virtual address you would like to allocate. SpecifyNoneif you don't need a particular virtual address. - size:

NonZeroUsize: The size of the region to allocate. This must be page-aligned. - flags:

MemoryFlags: A list of platform-specific flags to apply to this region.

The memory will return a MemoryRange that encompasses the given region.

You can free memory with UnmapMemory, though be very careful not to free memory that is currently in use. UnmapMemory simply takes the MemoryRange returned by MapMemory.

Physical Addresses

A program rarely needs to access physical addresses, and in most operating systems it's not the kind of thing you can actually do. However, Xous is designed to be embedded, so it's entirely legal to request a physical address.

The trick is that you can only request physical addresses that actually exist. For example, you cannot request a physical address for a mirrored region of a peripheral because that is not a valid address.

If you request a physical address from main RAM, the memory will be zeroed when you receive it. Peripherals and ares that are not in main RAM will not be zeroed. It is for this reason that system services are recommended to claim all peripherals before running user programs.

Virtual Addresses

All Xous programs run with virtual memory enabled. Attempting to perform an illegal operation will result in an exception. If you have an exception handler installed, illegal memory accesses will run this exception handler which may fix up the exception.

Demand Paging

When you allocate memory using MapMemory(None, None, ..., ...), you will be handed memory from the DEFAULT_BASE. This memory will not be backed by a real page, and will only be allocated by the kernel once you access the page. This allows threads to allocate large stacks without running out of memory immediately.

Pages that are mapped-but-unallocated are visible in a process' page table view. As an example, consider the following excerpt from a page table view:

38 60026000 -> 400a3000 (flags: VALID | R | W | USER | A | D)

41 60029000 -> 40108000 (flags: VALID | R | W | A | D)

42 6002a000 -> 40109000 (flags: VALID | R | W | A | D)

43 6002b000 -> 00000000 (flags: R | W)

44 6002c000 -> 00000000 (flags: R | W)

Addresses 0x60026000 (#38), 0x60029000 (#41), and 0x6002a000 (#42) are all allocated. The rest of the pages are valid-but-unallocated.

Address 0x60026000 (#38) is mapped to the process and has a valid physical address. Reads and writes to this page are backed by physical address 0x400a3000.

Addresses 0x60029000 (#41) and 0x6002a000 (#42) are still owned by the kernel, likely because they were being cleared.

Addresses 0x6002b000 (#43) and 0x6003c000 (#44) are on-demand allocated. They have no physical backing, and attempting to access them will result in a kernel fault where they will be allocated. When the page is allocated, it will be given the flags R | W in addition to default kernel flags.

The Heap

When we talk about "The Heap" we mean data that is managed by functions such as malloc. Xous has a pair of syscalls that behave vaguely like the Unix brk command.

IncreaseHeap(usize, MemoryFlags) will increase a program's heap by the given amount. This returns the new heap as a MemoryRange.

To decrease the heap by a given amount, call DecreaseHeap(usize).

Note that you must adjust the heap in units of PAGE_SIZE.

You can avoid using these syscalls by manually allocating regions using MapMemory, however they are a convenient abstraction with their own memory range.

liballoc as bundled by Xous uses these syscalls as a backing for memory.

Virtual Memory Regions

There are different memory regions in virtual address space:

| Address | Name | Variable | Description |

|---|---|---|---|

| 0x0001_0000 | text | - | Start of .text with the default riscv linker script (riscv64-unknown-elf-ld -verbose) |

| 0x2000_0000 | heap | DEFAULT_HEAP_BASE | Start of the heap section returned by IncreaseHeap |

| 0x4000_0000 | message | DEFAULT_MESSAGE_BASE | Base address where MemoryMessage messages are mapped inside of a server |

| 0x6000_0000 | default | DEFAULT_BASE | Default region when calling `MapMemory(..., None, ..., ...) -- most threads have their stack here |

| 0x7fff_ffff | stack | - | The default stack for the first thread - grows downwards from 0x8000_0000 not inclusive |

| 0xa000_0000 | swhal | SWAP_HAL_VADDR | Hardware-specific pages for the swapper. For configs that use memory-mapped swap, contains the memory mapping (and thus constrains total swap size). For configs that use register-mapped swap, contains the HAL structures for the register driver. These configurations could potentially have effectively unlimited swap. |

| 0xe000_0000 | swpt | SWAP_PT_VADDR | Swap page table roots. One page per process, contains virtual addresses (meant to be walked with code) |

| 0xe100_0000 | swcfg | SWAP_CFG_VADDR | Swap configuration page. Contains all the arguments necessary to set up the swapper. |

| 0xe100_1000 | swrpt | SWAP_RPT_VADDR | Location where the memory allocation tracker (runtime page tracker) is mapped when it is shared into userspace. |

| 0xe110_0000 | swcount | SWAP_COUNT_VADDR | Location of the block swap count table. This is statically allocated by the loader before the kernel starts. |

| 0xe800_0000 | swstack | SWAP_STACK_VADDR | Location of the swap handler context's stack pointer. Needed because swap events in TID=0 don't always have a proper stack. Builds down from this address. |

| 0xff00_0000 | kernel | USER_AREA_END | The end of user area and the start of kernel area |

| 0xff40_0000 | pgtable | PAGE_TABLE_OFFSET | A process' page table is located at this offset, accessible only to the kernel |

| 0xff80_0000 | pgroot | PAGE_TABLE_ROOT_OFFSET | The root page table is located at this offset, accessible only to the kernel |

| 0xff80_1000 | process | PROCESS | The process context descriptor page |

| 0xff90_0000 | buffer | USERSPACE_BUFFER | Location for userspace buffer mappings |

| 0xffc0_0000 | kargs | KERNEL_ARGUMENT_OFFSET | Location of kernel arguments |

| 0xffd0_0000 | ktext | - | Kernel .text area. Mapped into all processes. |

| 0xfff7_ffff | kstack | - | Kernel stack top, grows down from 0xFFF8_0000 not inclusive |

| 0xfffe_ffff | exstack | - | Stack area for exception handlers, grows down from 0xFFFF_0000 not inclusive |

In addition, there are special addresses that indicate the end of a function. The kernel will set these as the return address for various situations, and they are documented here for completeness:

| Address | Name | Variable | Description |

|---|---|---|---|

| 0xff80_2000 | retisr | RETURN_FROM_ISR | Indicates the return from an interrupt service routine |

| 0xff80_3000 | exitthr | EXIT_THREAD | Indicates a thread should exit |

| 0xff80_4000 | retex | RETURN_FROM_EXCEPTION_HANDLER | Indicates the return from an exception handler |

| 0xff80_8000 | retswap | RETURN_FROM_SWAPPER | Indicates the return from the userspace swapper code. Only available when swap feature is selected. |

Hosted Mode

Hosted mode may be built by running cargo xtask run. This causes Xous to be compiled using your native architecture rather than building for riscv32imac-unknown-xous-elf. Your native architecture is probably 64-bits, and has a lot more memory than Betrusted does. Xous also runs in userspace, which means a lot of things end up being very different in this mode.

The API is designed to abstract away these differences so that programs may run seamlessly on both Hosted and Native (RISC-V 32) mode.

The Kernel as a Process

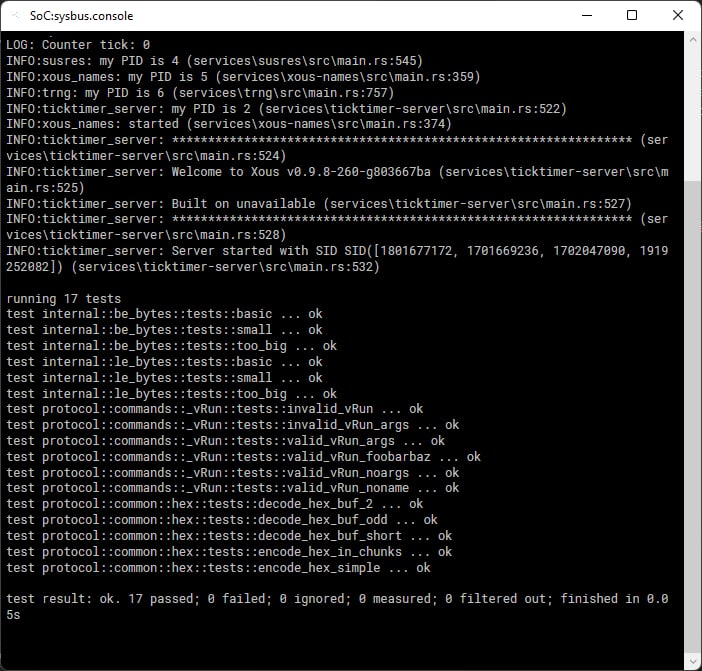

When you build processes using cargo xtask run, the kernel is compiled as an ordinary, native program. This program can be run by simply running ./target/release/kernel. If you run this by itself after running cargo xtask run, you'll see the following output:

$ ./target/release/kernel

KERNEL: Xous server listening on 127.0.0.1:1238

KERNEL: Starting initial processes:

PID | Command

-------+------------------

The kernel simply acts as a router, passing messages between processes. This poses some challenges because processes need to be able to connect to one another, and the kernel needs to be able to match a network connection to a given process. Additionally, there needs to be a list of initial processes to start.

Initial Processes

In order to get a list of initial processes, they are simply all passed on the command line. For example, we can run the kernel with a log server and see the following output:

$ ./target/release/kernel ./target/release/log-server

KERNEL: Xous server listening on 127.0.0.1:21183

KERNEL: Starting initial processes:

PID | Command

-------+------------------

2 | ./target/release/log-server

LOG: my PID is 2

LOG: Creating the reader thread

LOG: Running the output

LOG: Xous Logging Server starting up...

LOG: Server listening on address SID([1937076088, 1735355437, 1919251245, 544367990])

LOG: my PID is 2

LOG: Counter tick: 0

From this output, we can see that the kernel has started the log server for us. Multiple initial processes may be specified:

$ ./target/release/kernel ./target/release/log-server ./target/release/xous-names

KERNEL: Xous server listening on 127.0.0.1:3561

KERNEL: Starting initial processes:

PID | Command

-------+------------------

2 | ./target/release/log-server

3 | ./target/release/xous-names

LOG: my PID is 2

LOG: Creating the reader thread

LOG: Running the output

LOG: Xous Logging Server starting up...

LOG: Server listening on address SID([1937076088, 1735355437, 1919251245, 544367990])

LOG: my PID is 2

LOG: Counter tick: 0

INFO:xous_names: my PID is 3 (services/xous-names/src/main.rs:360)

INFO:xous_names: started (services/xous-names/src/main.rs:375)

Launching a Process

Processes are launched in the kernel by setting a series of environment variables and then spawning a new process. The following environment variables are currently used:

| Variable | Description |

|---|---|

| XOUS_SERVER | The IP and TCP port of the kernel |

| XOUS_PID | The unique process ID of this kernel, assigned by the Xous kernel |

| XOUS_PROCESS_NAME | The process name, currently taken from the executable name |

| XOUS_PROCESS_KEY | An 8-byte hex-encoded key that uniquely identifies this process |

A thread is created for this process to handle it and to route messages within the kernel. The XOUS_PROCESS_KEY is effectively a single-use token that is unique per process and is used to match a process within the kernel.

When the process launches it should establish a connection to the kernel by connecting to XOUS_SERVER and sending XOUS_PROCESS_KEY. This will authenticate the process with the kernel ane enable it to send and receive messages.

The initial handshake has the following layout:

| Offset (Bytes) | Size | Meaning |

|---|---|---|

| 0 | 1 | Process ID of connecting process |

| 1 | 8 | 8-byte process key |

Sending and Receiving Syscalls

In Hosted mode, syscalls are sent via a network connection. Because pointers are unsafe to send, usize is defined on Hosted mode as being 32-bits. Additionally, most syscalls will return NotImplemented, for example it does not make sense to create syscalls such as MapMemory.

Messages function normally in Hosted mode, however they are more expensive than on real hardware. Because messages get sent via the network, the entire contents of a Memory message must be sent across the wire.

Eight 32-bit values are sent, and these may be followed by any data in case there is a Memory message.

| Offset (Bytes) | Usage (Calling) |

|---|---|

| 0 | Source thread ID |

| 4 | Syscall Number |

| 8 | Arg 1 |

| 12 | Arg 2 |

| 16 | Arg 3 |

| 20 | Arg 4 |

| 24 | Arg 5 |

| 28 | Arg 6 |

| 32 | Arg 7 |

| 36 | Contents of any buffer pointed to by args |

The process should expect a return, and should block until it gets a response. When it gets a response, a memory buffer may be required that is the same size as the buffer that was sent. The contents of this buffer will be appended to the network packet in the same manner as the calling buffer. If the message is a Borrow, then this data will be the same as the data that was sent. If it is a MutableBorrow, then the server may manipulate this data before it returns.

| Offset (Bytes) | Usage (Return) |

|---|---|

| 0 | Target thread ID |

| 4 | Return type tag |

| 8 | Arg 1 |

| 12 | Arg 2 |

| 16 | Arg 3 |

| 20 | Arg 4 |

| 24 | Arg 5 |

| 28 | Arg 6 |

| 32 | Arg 7 |

| 36 | Contents of any returned buffer |

Threading

All Xous syscalls go to the kernel, however certain syscalls are simply stubs. One example of this is threading, where the kernel has no way of actually launching a thread.

The application is responsible for creating new threads, and may do so either by "sending" a CreateThread call to the kernel or by creating a native thread using std::Thread::spawn().

When launching a thread with CreateThread, the kernel will allocate a new "Xous TID" and return that to the application. The application will then launch its new thread and set the local THREAD_ID variable to this ID. This ID will be used as part of the header when sending syscalls to the kernel, and will be used to delegate responses to their waiting threads.

If an application calls std::Thread::spawn() then it will not have a THREAD_ID set. When the thread attempts to send a syscall, hosted mode will notice that THREAD_ID is None. When this occurs, Hosted mode will create a "fake" thread ID (starting at TID 65536) and call SysCall::CreateThread(ThreadInit {}) to register this new ID. Then all subsequent calls will use this fake thread ID.

Process Creation

Creating processes is a fundamental requirement of modern operating systems above a certain size. Xous supports process creation, although it does not prescribe an executable format nor does it even have a built-in loader.

Process creation arguments vary depending on the platform being targeted, making this one of the less portable aspects of Xous. All platforms support the CreateProcess syscall, however the arguments to this syscall vary widely.

Creating Processes in Hosted Mode

In Hosted mode, the ProcessArgs struct contains a full command line to be passed directly to the shell. This is actually used by the kernel during its init routine when it spawns each child process of PID 1.

Internally, the parent process is responsible for launching the process as part of the create_process_post() that gets called after the successful return of the CreateProcess syscall. As part of this, the hook sets various environment variables for the child process such as its 16-byte key stored in the XOUS_PROCESS_KEY variable, as well as the PID stored in the XOUS_PID variable.

Creating Processes in Test Mode

Test mode is a special case. Tests don't want to depend on files in the filesystem, particularly as multiple tests are running at the same time. To work around this, processes are created as threads. This is a special case intended to support heavily-parallel machines that can run all thread tests simultaneously, and is not normally used.

Creating Processes on Native Hardware (e.g. RISC-V)

Process creation on real hardware requires a minimum of six pieces of information. These are all defined in the ProcessInit struct, which gets passed directly to the kernel:

pub struct ProcessInit {

// 0,1 -- Stack Base, Stack Size

pub stack: crate::MemoryRange,

// 2,3 -- Text Start, Text Size

pub text: crate::MemoryRange,

// 4 -- Text destination address

pub text_destination: crate::MemoryAddress,

// 5 -- Entrypoint (must be within .text)

pub start: crate::MemoryAddress,

}The stack defaults to 128 kB growing downwards from 0x8000_0000.

text refers to a region of memory INSIDE YOUR PROGRAM that will be detached and moved to the child process. This memory will form the initialization routine for the child process, and should contain no .bss or .data sections, unless it also contains code to allocate and set up those sections.

text_destination describes the offset where text will be copied. This address is determined by the link address of your initialization program.

The start is the address where the program counter will start. This is the address of your program's entrypoint. It must reside within the allocated text section, beginning at text_destination.

Native Hardware Entrypoint

The entrypoint for native hardware takes four arguments. When combined, these four arguments form a Server ID that can be used for sending additional data from the parent process to the child. An example loader program might look like the following:

pub extern "C" fn init(a1: u32, a2: u32, a3: u32, a4: u32) -> ! {

let server = xous::SID::from_u32(a1, a2, a3, a4);

while let Ok(xous::Result::Message(envelope)) =

xous::rsyscall(xous::SysCall::ReceiveMessage(server))

{

match envelope.id().into() {

StartupCommand::WriteMemory => write_memory(envelope.body.memory_message()),

StartupCommand::FinishStartup => finish_startup(server, envelope),

StartupCommand::PingResponse => ping_response(envelope),

_ => panic!("unsupported opcode"),

}

}

panic!("parent exited");

}This compiles down to a very efficient program that can be used to load a larger program into the new address space. Memory is written using the WriteMemory opcode to load new pages into the nascent process, and FinishStartup is used to shut down the server and jump to the new process entrypoint.

Limitations of Created Processes

NOTE: The following is subject to fixes in the kernel, and does not currently apply. This information is presented here in order to explain oddities observed when these features are implemented.

Newly-created processes cannot create servers with a predefined Server ID. They can only create randomized servers.

Processes created using CreateProcess are not ever scheduled to run. Parent processes must donate their quantum to child processes in order for them to run. This is done with a special syscall.

When a parent process exits, all child processes will also exit. This is because those processes will not be scheduled anymore, so there's no point in letting them continue to run.

Debugging with GDB

The kernel supports enabling the gdb-stub feature which will provide a gdb-compatible server on the 3rd serial port. This server can be used to debug processes that are running, and when a process crashes the kernel will automatically halt the process for debugging.

When using the debugger, many features are supported:

- Listing processes on the system

- Attaching to a given process

- Listing threads

- Examining and updating memory

- Examining and updating registers

- Single-stepping (non-XIP processes)

- Inserting breakpoints (non-XIP processes)

The following features are NOT SUPPORTED:

- Watchpoints

- Inserting breakpoints in XIP processes

- Single-stepping XIP processes

Building a GDB-Compatible Image

For the toolchain, Xous has harmonized around the xpack gcc distribution, but other GCC distributions version of GDB (even those that target RV64) should work.

You probably want to set debug = true inside Cargo.toml. This will add debug symbols to the resulting ELF binaries which greatly enhance the debugging experience. You may also want to reduce the optimization level and turn off strip if it is set.

When running xtask to create images, the target processes you want to debug should not be XIP. XIP images run out of FLASH, which makes the code immutable and thus impossible for our debugger implementation to insert a breakpoint (our breakpoints are not hardware backed). The easiest way to do this is to use the app-image generator (instead of app-image-xip). However, if you've turned your optimizations to 0 and included debug symbols, it's possible this isn't an option because you'll run out of memory. In this case, you will need to modify app-image-xip to check for the target process name and toggle the flag on just that process to run out of RAM.

You will also need to pass --gdb-stub as an argument to xtask.

For example:

cargo xtask app-image --gdb-stub mtxchat --feature efuse --feature tls

Then, flash the resulting image to the target device as normal.

Attaching to the debugger (Renode)

If you're using Renode, then you can connect gdb to localhost:3456:

riscv-none-elf-gdb -ex 'tar ext :3456'

On Renode, port 3333 also exists, but it is useful mainly for debugging machine mode, i.e., when the hardware is in the loader or inside the kernel only.

3333is useful for when Xous itself has crashed, or when you're debugging the bootloader and Xous isn't even running. It's a stop-the-world debugger. Like "God Mode" on the Vex, where you really can do anything. Debugging there has no effect on the emulated world, so it's like stopping time and looking at things. This port also has no concept of processes or threads, so what process you're in is arbitrary every time you pause the debugger.3456is identical to what is presented on the hardware serial port (see next section). It's invasive, since processes will keep running when you attach but their timing will be skewed. It does, however, let you attach to a given process and get actual translated memory pages. With 3333 you kind of just hope that you don't have to deal with any MMU pages in a process, which is a nonissue as long as you're just debugging the kernel or bootloader.

Attaching to the debugger (Hardware)

On real hardware, you will first need to re-mux the serial port so that gdb is visible on serial. Then you can connect gdb to the target serial port.

For example, if you have a hardware Precursor device connected to a Raspberry Pi 3B+ with a debug HAT running Raspbian "Buster", you would first run this command in shellchat on the hardware device itself:

console app

This switches the internal serial port mux in the Precursor to the GDB port.

Then, on the Raspberry pi command line, you would run this:

riscv-none-elf-gdb -ex 'tar ext /dev/ttyS0'

Debugging a process

Within the gdb server, you can switch which file you're debugging. For example, to debug the ticktimer, run:

(gdb) file target/riscv32imac-unknown-xous-elf/release/xous-ticktimer

After setting the ELF file you will need to attach to the process. Use mon pr or monitor process to list available processes. Then, use attach to attach to a process:

(gdb) mon pr

Available processes:

1 kernel

2 xous-ticktimer

3 xous-log

4 xous-names

5 xous-susres

6 libstd-test

(gdb) att 2

Attaching to process 2

[New Thread 2.2]

[New Thread 2.3]

0xff802000 in ?? ()

(gdb)

You can switch processes by sending att to a different PID.

Debugging a thread

To list threads, use info thr:

(gdb) info thr

Id Target Id Frame

* 1 Thread 2.255 0xff802000 in ?? ()

2 Thread 2.2 xous::definitions::Result::from_args (src=...) at src/definitions.rs:474

3 Thread 2.3 xous::definitions::Result::from_args (src=...) at src/definitions.rs:474

(gdb)

To switch threads, use thr [n]:

(gdb) thr 2

[Switching to thread 2 (Thread 2.2)]

#0 xous::definitions::Result::from_args (src=...) at src/definitions.rs:474

474 match src[0] {

(gdb)

Important Note: GDB thread numbers are different from Xous thread numbers! GDB always starts with 1, but Xous may have any number of threads running. GDB pays attention to the first column, and you are most likely interested in the second column.

Renode Emulation

Renode is a multi-device emulator written in C#. It is designed to assist in testing and development of software, and is also useful in developing new hardware blocks.

The emulator is available for Windows, Mac, and Linux. It is designed to simulate whole systems of devices, meaning it can easily capture the interactions between devices on a network or bus. It allows you to pause the system and inspect memory, single-step, and watch various sections of the bus.

There is extensive end-user documentation available at Read the Docs, which is highly recommended. The remainder of this chapter will cover recommendations on how to use Renode with Xous.

Quickstart using the Renode emulator

Xous uses Renode as the preferred emulator, because it is easy to extend the hardware peripherals without recompiling the entire emulator.

Download Renode and ensure it is in your path.

Then, build Xous:

cargo xtask renode-image

This will compile everything in release mode for RISC-V, compile the tools required to package it all up, then create an image file.

Finally, run Renode and specify the xous-release.resc REnode SCript:

renode emulation/xous-release.resc

Renode will start emulation automatically, and will run the same set of programs as in "Hosted mode".

Network support

If you are seeking to emulate a Xous build with apps requiring network support, then there is some additional setup.

Renode accepts the Ethernet frames that the EC sends to the simulated WF200, and then dumps them onto a network TAP interface called renodetap.

It is probably best to prepare the TAP interface and associated configuration before the emulation:

- linux distro with Network Manager:

nmcli c add con-name renodetun type tun ifname renodetun mode tap ipv4.method shared ipv6.method ignore - OpenSuSE Aeon: also requires installation of dnsmasq

transactional-update pkg install dnsmasq

Start the emulation with:

renode emulation/xous-release-tap.resc

Once the emulation is up and running then connect to one of the WiFi APs Renode1 ... Renode9 (any password will work)

Writing Renode C# Peripherals

Renode is written in C#, which means it has access to the entire base of C#. One feature of C# is the CSharpCodeProvider object which provides the CompileAssemblyFromSource(CompilerParameters, string[]) function. This means that Renode has a runtime C# compiler built in.

You can include C# files in the Renode console or in your startup script to dynamically add new peripherals to your environment. Xous uses this extensively in Betrusted since the hardware peripherals are still under development and therefore change regularly. Updating a hardware module in Renode simply involves modifying the .cs file and restarting Renode. There is no additional compile step.

Setting up an IDE -- Visual Studio Code

It is highly recommended to use a full IDE. The Renode API can change, and it can take time to restart Renode to recompile your C# files. An IDE will provide you with tab-completion and will immediately tell you if there is a code error.

The core of Renode is written in a full IDE such as Visual Studio or Monodevelop. These IDEs expect a full Project file that defines a single target output -- for example an executable or a linked library. With our usage of C# there is no single target since Renode will dynamically load the source files. To work around this, we create a stub project file that tricks the IDE into loading our assembly files and providing autocomplete. We never actually use this project file, but it's used behind the scenes automatically.

Broadly speaking, there are three steps to setting up an IDE:

- Download Visual Studio Code

- Copy the reference project file

- Modify the reference project file

- Install the C# extension.

To begin with download Visual Studio Code. It is available for Windows, Linux, and Mac.

Next, copy emulation/peripherals.csproj.template to emulation/peripherals.csproj. This is a C# Project file that is understood by Visual Studio and Visual Studio Code. The file name peripherals.csproj is in the .gitignore file, so don't worry about accidentally checking it in.

Edit peripherals.csproj and modify RenodePath to point to your Renode installation where the .dll files are located. On Linux this is likely /opt/renode/bin. On Windows this may be in C:\Program Files\.

Finally, install the C# for Visual Studio Code extension. This extension will activate, parse your .csproj, and start providing autocomplete and compile suggestions.

Creating a new Peripheral

To create a new peripheral, simply copy an existing peripheral to a new filename under emulation/peripherals/, making sure the filename ends in .cs.

Many examples exist in the emulation/peripherals/ directory, and you can find many more examples built into Renode.

A simple example could be a device that provides random numbers:

using Antmicro.Renode.Core;

using Antmicro.Renode.Core.Structure.Registers;

using Antmicro.Renode.Logging;

namespace Antmicro.Renode.Peripherals.Miscellaneous

{

public class ExampleRNGServer : BasicDoubleWordPeripheral, IKnownSize

{

public long Size { get { return 0x100; } }

public GPIO IRQ { get; private set; }

private readonly PseudorandomNumberGenerator rng = EmulationManager.Instance.CurrentEmulation.RandomGenerator;

private bool enabled = true;

private enum Registers

{

CONTROL = 0x0,

DATA = 0x4,

STATUS = 0x8,

AV_CONFIG = 0xc,

RO_CONFIG = 0x10,

READY = 0xc4,

EV_STATUS = 0xc8,

EV_PENDING = 0xcc,

EV_ENABLE = 0xd0,

URANDOM = 0xdc,

URANDOM_VALID = 0xe0,

TEST = 0xf8,

}

public ExampleRNGServer(Machine machine) : base(machine)

{

this.IRQ = new GPIO();

DefineRegisters();

}

private void DefineRegisters()

{

Registers.URANDOM.Define(this)

.WithValueField(0, 32, FieldMode.Read, valueProviderCallback: _ =>

{

if (!enabled)

return 0;

return (uint)rng.Next();

}, name: "URANDOM");

Registers.DATA.Define(this)

.WithValueField(0, 16, FieldMode.Read, valueProviderCallback: _ =>

{

if (!enabled)

return 0;

return (uint)rng.Next();

}, name: "DATA")

.WithValueField(16, 16, FieldMode.Read, valueProviderCallback: _ =>

{

return 0xf00f;

}, name: "SIGNATURE");

Registers.URANDOM_VALID.Define(this)

.WithFlag(0, FieldMode.Read, valueProviderCallback: _ => { return true; }, name: "URANDOM_VALID")

.WithFlag(1, FieldMode.Read, valueProviderCallback: _ => { return enabled; }, name: "ENABLE");

Registers.CONTROL.Define(this)

.WithFlag(0, FieldMode.Write, writeCallback: (_, val) => { enabled = val; }, name: "ENABLE");

}

}

}

There's a lot to take in there, particularly if you've never dealt with C# before. Let's go over the module line-by-line.

using Antmicro.Renode.Core;

using Antmicro.Renode.Core.Structure.Registers;

using Antmicro.Renode.Logging;

The first three lines import various packages to the current namespace. You'll most likely use these in all of your projects. Any valid C# namespace may be used, including core .Net libraries. This can be useful if you need networking, cryptography, or other exotic libraries. There are many useful logging functions as well. You'll notice that the final line is darker than the other two. This is because this package is currently unused -- we don't perform any logging currently. You can safely remove this final line, however it's useful to leave Logging as an import because it allows for autocompletion of Logging functions.

namespace Antmicro.Renode.Peripherals.Miscellaneous {

Next, we define the namespace for this module. The module MUST be under a namespace Antmicro.Renode.Peripherals.xxx namespace. In this case, it is under Antmicro.Renode.Peripherals.Miscellaneous. This namespacing provides a handy structure to various peripherals.

public class ExampleRNGServer : BasicDoubleWordPeripheral, IKnownSize {

Finally, we begin to define our class. This class is named ExampleRNGServer, and it inherits BasicDoubleWordPeripheral and IKnownSize.

The BasicDoubleWordPeripheral class provides several convenience functions that makes it easy to create a memory-mapped device. It means we don't need to manage accessors, and we can simply worry about the register values themselves.

Peripherals need to have a known size, so we inform C# that our client has a known size. The I stands for Interface. To find out which functions we must implement to conform to IKnownSize, hold Ctrl and click on IKnownSize. It will take you to the definition of IKnownSize, located inside Emulator.dll. You will note that the only thing we need to implement is log Size { get; }, which means we only need to create an accessor for the property Size.

public long Size { get { return 0x100; } }

public GPIO IRQ { get; private set; }

private readonly PseudorandomNumberGenerator rng = EmulationManager.Instance.CurrentEmulation.RandomGenerator;

private bool enabled = true;

Here we define our local properties and variables. We can see the Size property defined here. Our peripheral goes up to 0xf8, so we return that as a constant. This is used by Renode to ensure peripherals don't overlap, and to know which peripheral to invoke when memory is accessed.

There is an IRQ here as well, which is a GPIO. The way Renode handles interrupts is by reusing GPIO pins. We can trigger an interrupt by setting this GPIO, and the system will invoke an interrupt context on the CPU.

Finally there is a local variable that is part of this object and not visible outside of our class.

private enum Registers

{

CONTROL = 0x0,

DATA = 0x4,

STATUS = 0x8,

AV_CONFIG = 0xc,

RO_CONFIG = 0x10,

READY = 0xc4,

EV_STATUS = 0xc8,

EV_PENDING = 0xcc,

EV_ENABLE = 0xd0,

URANDOM = 0xdc,

URANDOM_VALID = 0xe0,

TEST = 0xf8,

}

We define an enum called Registers. This is simply a mapping of register names to register numbers. It is not a particularly special enum, however correct naming of the enum values will make it easier to define the register set later on. It is standard practice to define all possible registers in this enum, even if you do not implement them right away.

public ExampleRNGServer(Machine machine) : base(machine)

{

this.IRQ = new GPIO();

DefineRegisters();

}

This is the constructor for our device. It takes a single argument of type Machine. Because we inherit from BasicDoubleWordPeripheral, we will need to call the constructor for the base class. To figure out what the constructor looks like, hold Ctrl and click on BasicDoubleWordPeripheral. We can see that the constructor for that class simply takes one argument that's a Machine. Therefore, the first line of our constructor should invoke the base constructor directly. Which is what we do here.

We create a new GPIO and assign it to the IRQ. Renode will access our IRQ property if it wants to watch for interrupts. If our peripheral has no interrupts we can omit the IRQ property.

private void DefineRegisters() {

Finally, we invoke the DefineRegisters() function. It is the most complicated function in this class, however it's where most of the work is done. Let's look at each register definition in order.

Registers.URANDOM.Define(this)

.WithValueField(0, 32, FieldMode.Read, valueProviderCallback: _ =>

{

if (!enabled)

return 0;

return (uint)rng.Next();

}, name: "URANDOM");

The Define(this) function comes from BasicDoubleWordPeripheralExtensions, which is one of the classes provided to us as a subclass of BasicDoubleWordPeripheral. It allows us to define a register on an enum type.

The WithValueField() function defines a value for a register across a range of values. In this case, we define a value beginning at bit 0 that is 32-bits wide. We define this register as a FieldMode.Read register, meaning writes will be ignored. When a device accesses this register, the valueProviderCallback function will be called.

What follows is a C# closure. The first argument is the register itself, which we ignore since we are not interested in it. Therefore, the variable is named _. If the block is disabled, we return 0, otherwise we return a uint from the class RNG provider.

Finally, we name the register URANDOM.

Registers.DATA.Define(this)

.WithValueField(0, 16, FieldMode.Read, valueProviderCallback: _ =>

{

if (!enabled)

return 0;

return (uint)rng.Next();

}, name: "DATA")

.WithValueField(16, 16, FieldMode.Read, valueProviderCallback: _ =>

{

return 0xf00f;

}, name: "SIGNATURE");

This register contains two value fields. The first is at offset 0, and is 16-bits wide. The second is at offset 16, and is also 16-bits wide.

The valueProviderCallback function is called for each field, which avoids the need for any manual bit shifting.

If the peripheral is not enabled, then the DATA field returns 0. If it is enabled, then it returns a 16-bit random value.

Because of the way this register is defined, the top 16 bits will always be 0xf00f. Therefore, the register's value will be either 0xf00f0000 or 0xf00fRAND.

Registers.URANDOM_VALID.Define(this)

.WithFlag(0, FieldMode.Read, valueProviderCallback: _ => { return true; }, name: "URANDOM_VALID")

.WithFlag(1, FieldMode.Read, valueProviderCallback: _ => { return enabled; }, name: "ENABLE");